Why I Did This

Imagine being able to measure someone's heart rate just by analyzing a minute-long video of their face—no wearables, no wires, just pixels. That’s what remote photoplethysmography (rPPG) enables. But when applied to diverse populations, especially in variable lighting or motion-heavy environments, traditional methods falter.

So I asked: Can we push this boundary using modern deep learning and signal amplification? Spoiler alert—yes, we can.

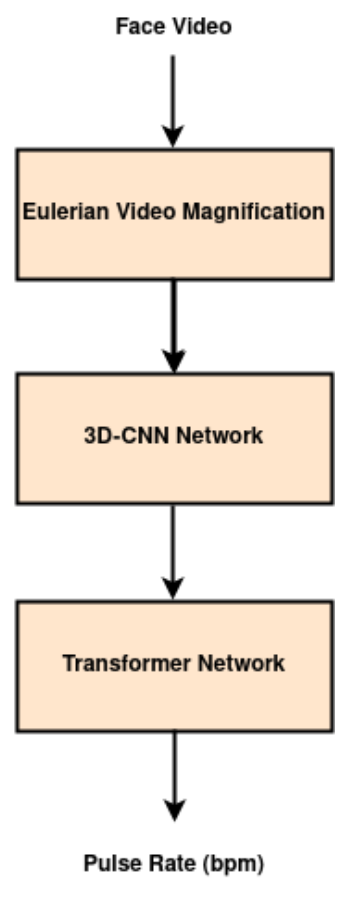

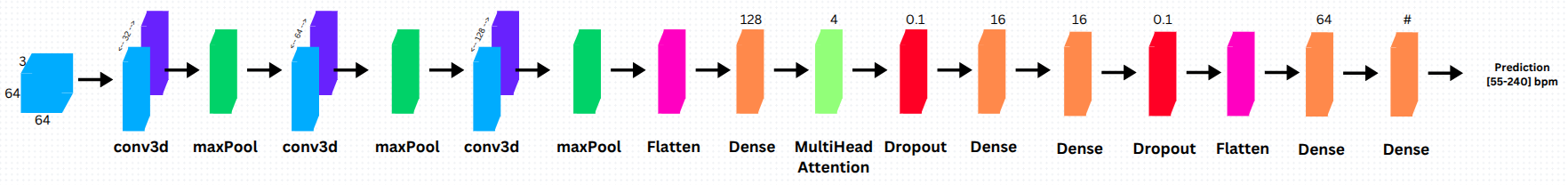

The Hybrid Stack: EVM + 3D CNN + Transformers

To tackle the noise and sensitivity issues in conventional rPPG, I built a pipeline that uses:

- Eulerian Video Magnification (EVM) to enhance faint color changes related to blood flow.

- 3D CNNs to extract rich spatiotemporal features from the preprocessed video.

- Transformers to capture long-range temporal dependencies using self-attention.

This combination bridges the gap between low-level pixel changes and high-level signal understanding. That matters most when dealing with diverse skin tones and non-laboratory recording conditions, as in our recordings of Indian participants.
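To make the EVM step concrete, here is a minimal sketch of the color-magnification idea: band-pass each pixel's intensity over time, then add the amplified band back to the video. It is not the exact code from this project. The function name `magnify_color`, the 0.7 to 4.0 Hz band (roughly 42 to 240 bpm), and the gain `alpha` are assumptions for illustration, and the spatial pyramid decomposition that full EVM applies before temporal filtering is left out.

```python
import numpy as np

def magnify_color(frames, fps, low_hz=0.7, high_hz=4.0, alpha=50.0):
    """Amplify temporal color variation inside a frequency band.

    frames: float array of shape (T, H, W, C) with values in [0, 1].
    The band limits and alpha are illustrative defaults, not tuned values.
    """
    frames = np.asarray(frames, dtype=np.float64)
    n = frames.shape[0]
    # Frequency (in Hz) of each bin of the temporal FFT.
    freqs = np.fft.rfftfreq(n, d=1.0 / fps)
    spectrum = np.fft.rfft(frames, axis=0)
    # Ideal band-pass filter: zero every bin outside the assumed pulse band.
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    spectrum[~keep] = 0
    band = np.fft.irfft(spectrum, n=n, axis=0)
    # Add the amplified band back and keep pixel values in range.
    return np.clip(frames + alpha * band, 0.0, 1.0)

# Synthetic check: a flat gray clip with a faint 1.2 Hz (72 bpm) flicker.
t = np.arange(300) / 30.0
flicker = 0.001 * np.sin(2 * np.pi * 1.2 * t)
video = 0.5 + flicker[:, None, None, None] * np.ones((1, 8, 8, 3))
magnified = magnify_color(video, fps=30.0)
```

In a pipeline like the one above, the magnified frames would then be passed to the 3D CNN instead of the raw frames.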

Building the Dataset

We recorded one-minute facial videos of 39 Indian participants using standard smartphone cameras. Simultaneously, we collected ground-truth pulse and respiratory data using fingertip pulse oximeters. The videos were resized to 64×64 pixels and trimmed to 300 frames each. Data diversity was key: we deliberately recorded participants under varied lighting and environmental conditions to simulate real-world deployment.

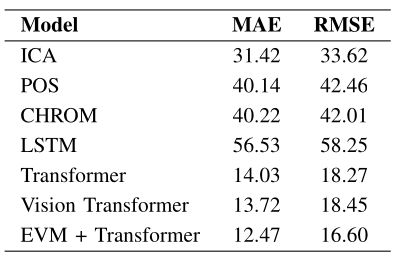
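The trimming and resizing step can be sketched roughly as follows. This is a simplified illustration, not the project's actual preprocessing code. The function name `preprocess_clip`, keeping the first 300 frames, the nearest-neighbour resize, and scaling pixel values to [0, 1] are all assumptions. A real pipeline would more likely crop the face first and use a library resize with interpolation.

```python
import numpy as np

def preprocess_clip(frames, num_frames=300, size=64):
    """Trim a clip to `num_frames` and resize each frame to size x size.

    frames: uint8 array of shape (T, H, W, 3).
    Returns a float32 array of shape (num_frames, size, size, 3) in [0, 1].
    """
    frames = np.asarray(frames)
    if frames.shape[0] < num_frames:
        raise ValueError("clip is shorter than the requested number of frames")
    # Assumption: keep the first `num_frames` frames of the recording.
    clip = frames[:num_frames]
    # Nearest-neighbour resize: pick evenly spaced source rows and columns.
    height, width = clip.shape[1:3]
    rows = np.arange(size) * height // size
    cols = np.arange(size) * width // size
    resized = clip[:, rows][:, :, cols]
    # Scale 8-bit pixel values to the [0, 1] range.
    return resized.astype(np.float32) / 255.0

# Example with random pixels standing in for a decoded smartphone video.
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(310, 96, 128, 3), dtype=np.uint8)
clip = preprocess_clip(raw)
```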

What Worked (and What Didn’t)

✅ EVM drastically improved the signal-to-noise ratio in challenging settings.

✅ Transformers handled temporal dynamics better than traditional LSTMs or CNNs.

✅ Our final model achieved a mean absolute error (MAE) of 12.47 bpm, significantly outperforming baselines.

❌ Early models without EVM struggled with generalization.

❌ Pure CNN/RNN-based pipelines lacked robustness to facial variations and motion.
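For readers unfamiliar with the metric, MAE is the average absolute gap between the predicted and the reference heart rate, in beats per minute. A minimal sketch follows. The function name and the numbers are made up for illustration and are not values from this study.

```python
import numpy as np

def mean_absolute_error_bpm(predicted, reference):
    """Average absolute difference between two sets of heart rates (bpm)."""
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if predicted.shape != reference.shape:
        raise ValueError("predicted and reference must have the same shape")
    return float(np.mean(np.abs(predicted - reference)))

# Hypothetical values: model outputs vs. pulse-oximeter readings.
predicted = [72.0, 80.0, 65.0]
reference = [70.0, 85.0, 66.0]
mae = mean_absolute_error_bpm(predicted, reference)
# Absolute errors of 2, 5 and 1 bpm average to about 2.67 bpm.
print(f"MAE: {mae:.2f} bpm")
```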

The Bigger Picture

This research opens doors beyond just pulse rate estimation. For instance, I’ve begun extending this work to active nostril detection—using the same amplified and temporally modeled video data to study respiratory flow patterns. Applications range from sleep apnea monitoring to yoga training analytics.

Looking Ahead

Here’s where I see this going:

- Real-time Implementation: On-device inference using TensorRT or ONNX for mobile applications.

- Respiration Monitoring: Expanding the current system to detect nostril-level airflow patterns.

- Inclusivity by Design: Adapting models for global populations, starting with the Indian demographic.

🎯 “The future of healthcare isn’t wearable—it’s invisible.”

This project is my small contribution toward that invisible, accessible, and intelligent future.